Overview

The EEF’s programme grant funding generates new evidence to enhance our understanding of what’s most likely to be effective in improving attainment and related outcomes, especially for the most disadvantaged pupils. As confidence increases in a programme’s impact, we seek to scale up its delivery, whether in schools, nurseries or colleges.

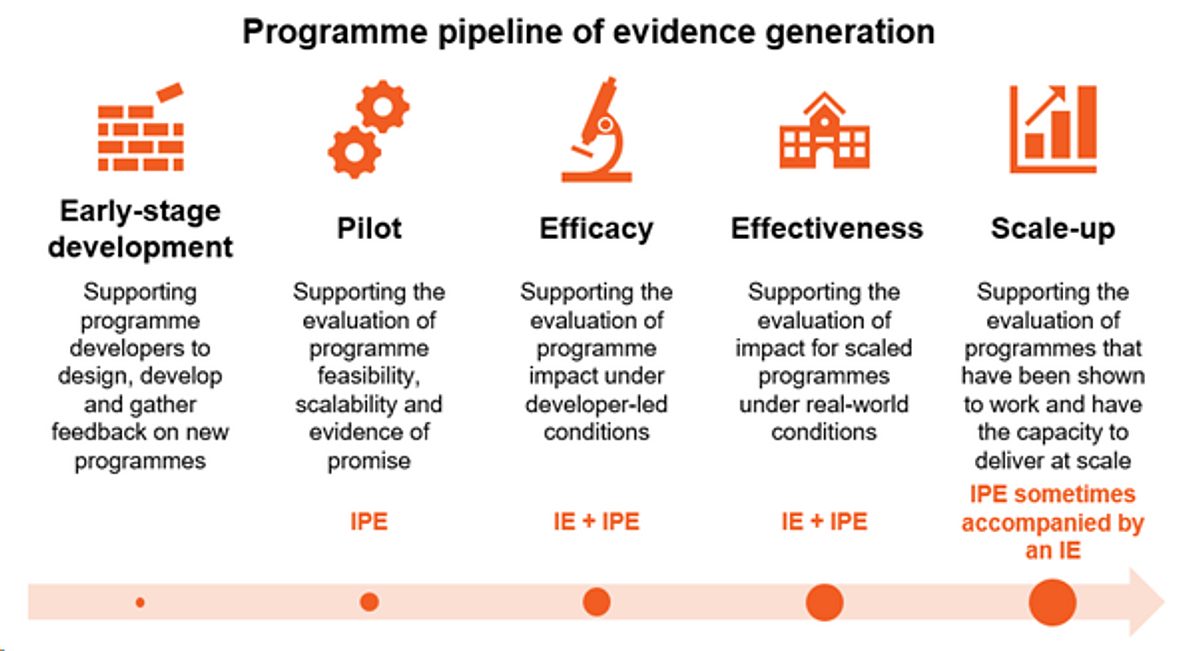

The term ‘pipeline’ is used to describe the progression of evidence we generate. We commission teams of independent evaluators to conduct implementation and process evaluations (IPE) and impact evaluations (IE) across the pilot to scale-up stages of the pipeline (see figure below). Since 2021, the pipeline has also included an early-stage programme development (ESPD) stage, which supports programme developers to design, incubate and develop new programmes that address a specific evidence and practice gap. These programmes include a formative feedback and reflection component conducted by the programme developers and are not independently evaluated.

The figure below describes our pipeline of evidence generation and the corresponding research design:

The EEF uses criteria to inform decisions on which programmes are suitable for specific stages of evaluation. We run open funding rounds for programmes at the ESPD stage (both ‘innovation’, the development of brand-new programmes, and ‘development’, the refinement of early-stage programmes) and at the pilot, efficacy and effectiveness stages. We do not invite open applications for scale-up evaluations: programmes considered for scale-up evaluations will already have positive results from a previous EEF-funded impact evaluation.

Early-Stage Programme Development (innovation)

- Relevance of the proposed programme. The organisation has promising ideas for a brand-new programme (i.e. it can’t already be purchased and hasn’t previously been tested in more than one setting).

- Understanding of the evidence. The application demonstrates a good understanding of the existing evidence in at least one of the following areas: teaching and learning, professional development, or implementation.

- Alignment to EEF’s mission. The organisation presents a convincing argument about (1) how their programme will benefit disadvantaged pupils, and/or (2) how disadvantaged pupils are a key area of focus for their organisation.

- Capacity and experience of the project team. The team have experience in delivering similar projects (e.g. developing new programmes, delivering professional development to practitioners, or creating programme materials) and have appropriately allocated the work across team members.

Pilot (also applies to ‘development’ projects that form part of early-stage programme development)

- Fit with portfolio. The programme aligns with our strategic priorities and addresses a gap in the evidence: few programmes in this area have been rigorously evaluated, and no other similar programmes are ready for trial.

- Scale of delivery. The programme has been delivered in a small number of schools or within a group of schools (such as a single multi-academy trust, or MAT). It has the potential to be delivered at scale in a future efficacy or effectiveness trial (involving roughly 50 or more schools).

- Capacity and experience. The delivery team currently has the capacity and experience to deliver to a small number of schools (around 15 to 20). The team is prepared to build capacity to scale the programme if it progresses to a trial.

- Level of development. Programme activities and materials are codified but may not be fully developed and have not been adequately tested.

- Feasibility of implementation. There is some uncertainty around feasibility (which includes acceptability), but the concerns are not severe.

- Evidence for the Theory of Change. There is some evidence supporting the Theory of Change, but further evidence is needed to understand whether the causal assumptions are likely to hold.

- Programme differentiation. The programme activities are sufficiently distinct from existing practice.

Efficacy and effectiveness

- Evidence of promise. There is a clear Theory of Change suggesting that the programme would have an impact on attainment. The programme is clearly distinct from existing practice, and there is supporting evidence for its Theory of Change mechanisms, demonstrated through intermediate outcomes (e.g. indicative change in teacher practice or pupil engagement). There is positive evidence, often quantitative, from prior evaluations of the programme or of very similar approaches, ideally with well-matched or randomised control groups. Programmes may be considered for testing at effectiveness level where the prior evidence is very strong (e.g. positive results from a previous rigorous RCT).

- Scalability. The programme is well defined and developed, has been shown to be feasible and attractive to schools/settings, and has been delivered previously, and capacity is in place to deliver to settings in a trial (50+ settings). We also assess the potential for the programme to be delivered at a larger scale in the future. Programmes may be considered for testing at effectiveness level where delivery is already at a large scale and the model to be tested is suitable for national scale without adjustment.

- Alignment with EEF mission. We assess how well the programme fits the areas of interest identified in the EEF’s current Research Agenda; whether it addresses a gap in evidence and/or practice; its relevance to schools/settings and policy makers; and the rationale for its impact on socio-economically disadvantaged children and its potential to close the attainment gap (including specific experience of running in schools with a higher-than-average proportion of socio-economically disadvantaged children).

Specific criteria inform decisions on which programmes progress along the pipeline, based on the findings of the EEF-funded evaluation as well as other sources of information.

Progression to pilot or efficacy

Similar criteria and factors are considered when progressing programmes to the pilot or efficacy stage.

- Evidence of promise. There is evidence supporting the programme’s Theory of Change (ToC); for example, the pilot evaluation has captured changes in teaching practice or pupil engagement. We also consider whether there is potential, and/or additional evidence, that the programme may help to close the attainment gap.

- Feasibility of implementation. The programme was well implemented, was well received by settings and pupils, and was delivered as intended. The programme is considered affordable to settings. The intervention content and implementation approach are acceptable to teachers or practitioners.

- Readiness for trial. The programme is well codified with no need for substantial development work. The programme can be scaled for delivery to around 50 schools, and the developer has sufficient capacity to deliver the programme with fidelity in a trial.

Progression to effectiveness and scale-up

The same criteria and factors are considered for progressing programmes from the efficacy to the effectiveness stage as for progressing from an effectiveness trial to scale-up. These are listed below.

- Effect size. The programme shows at least one month’s additional progress on the primary outcome. We also consider secondary outcome results, the width of confidence intervals, compliance analysis results and the type of outcome tests used.

- Potential impact for pupils eligible for Free School Meals (FSM). There should be no evidence that pupils in receipt of FSM have been disadvantaged by the intervention compared to their peers, and we prioritise programmes where there is an indication that disadvantaged pupils may benefit more from the programme than their peers (potential to close the attainment gap).

- Cost. The cost of the programme relative to the impact is within a threshold value, which is set and regularly reviewed by the EEF.

- Implementation. The programme was implemented with good levels of compliance and fidelity.

- Context. The programme still addresses an area of interest for the EEF and fits with current school/setting and policy priorities.

- Implementation. The programme was implemented with good levels of compliance and fidelity.

- Security of findings. The impact result is secure (3 padlocks or above). We may consider re-trialling a programme at the same stage if there is an indication of promise but the evaluation’s findings are too low in security to be interpreted fully.

- Scalability. The programme developer has viable plans to scale the programme. We also consider any changes to the programme or delivery model that would be required for scale, and how these relate to the observed impact.

Programme re-granting decisions are made bi-annually, aligned with our open application rounds. We review the portfolio of programmes with recent peer-reviewed evaluation results, assess how well they meet the defined criteria and consider their overall priority for the EEF. For programmes at the ESPD stage, progression is based on the EEF’s assessment of the formative data gathered by the programme developers. Decisions on whether a programme progresses up the pipeline often involve scoping discussions with programme developers. Programmes identified for re-granting then go through an approval process by the EEF’s Grants Committee. If a programme is approved, we look to set up the evaluation following our standard commissioning process, with timings aligned to the research agenda open funding round where possible. However, the timing of project set-up will depend on the EEF’s internal capacity and priorities, as well as the programme developer’s preference.